I’ve used coding agents throughout 2025, first with Cursor and now with Claude Code, and I haven’t had any real complaints. However, when I talked to other developers at conferences, I found that many were frustrated or distrustful of agents because of hallucinations and bugs, and some had given up entirely. I included a section on this in my talk about AI and React Native at App.js Conf 2025, because the gap between my experience and theirs felt worth exploring.

Better models have come out over the course of 2025, and the agents themselves have improved, but my workflow hasn’t really changed. The approach that worked for me early on still works now: plan your work before you start writing code.

Key Takeaways

- Plan before you code: Create a plan.md file with your AI agent before writing any code. This collaborative planning step prevents hallucinations, catches edge cases, and produces better results than jumping straight into implementation.

- Context engineering beats prompt engineering: Setting up the right environment for your AI agent (docs, constraints, architectural decisions) matters more than crafting the perfect prompt.

- Plans persist, chat history doesn't: A plan.md file acts as a shared reference that survives across sessions. Your architectural decisions, constraints, and reasoning compound over time instead of resetting.

- Planning before coding is a timeless principle: Developers who learned to plan before implementing (when iterations were expensive) may recognize this pattern faster. AI agents are new, but the principle is not.

- Refine iteratively, then execute: Each pass over your plan adds detail, catches edge cases, and checks assumptions against your actual codebase. Once the plan is solid, you can even execute it with multiple agents in parallel.

Two Ways to Work with AI

There are two main approaches to AI-assisted development. The first is vibe coding, where you dive straight into implementation. You start writing code with your agent immediately, iterate on the fly, and get quick results. The second is a plan-first approach, where you collaborate with your agent on a detailed plan before writing any code.

Vibe coding works when you just want to see something built quickly. You bounce ideas back and forth with your AI agent, iterate without much structure, and move fast. There’s a real dopamine hit when you see work that used to take hours get done in minutes. This approach is perfect for side projects, prototypes, or experiments where you want results without thinking deeply about the codebase.

Vibe coding makes it easy to turn your brain off. That’s fine for prototypes. But when you’re writing software that generates revenue, handles sensitive data, or affects real users at scale, it’s irresponsible to let go of critical thinking. Vibe coding falls short when quality matters because you end up with code that looks right but can have subtle bugs, or solutions that work in isolation but break assumptions elsewhere in the system.

A planning approach lets you work diligently with AI by building up context before implementation. This is where we make the conceptual jump from prompt engineering to context engineering. Prompt engineering focuses on crafting individual prompts to get better outputs. Context engineering is broader: it’s about setting up the entire contextual environment around your AI agent so it has what it needs before you ask it to do anything. Instead of packing all your requirements into a single prompt, you build up context over time through collaboration, and then you execute.

The plan.md approach

It’s easy to collaborate with an AI agent when you’re building up context together in a plan.md file. A plan.md file gives you three things that make AI collaboration significantly better.

First, it’s a collaborative space. You can break down problems, consider different approaches, document constraints, and iterate on solutions before writing any code. You can try out ideas and explore possibilities without commitment. And you can get the agent to write and iterate on the plan for you, with your steering. When the agent has all the context it needs, it doesn’t have to guess or fill in gaps with assumptions. That alone eliminates a huge category of hallucinations and bugs.

Second, it persists. Chat history fades or gets truncated. A plan.md file is like a hard disk: your architectural decisions, constraints, edge cases, and reasoning stay accessible across sessions. You can revisit them, refine them, and build on them. Your planning compounds instead of resetting with each session.

Third, planning before coding has always been a good idea. I grew up as a developer back when we didn’t have fast refresh and had to wait for code to compile. Iterations were expensive, so you thought through your approach before touching the keyboard. Planning wasn’t optional; it was how you avoided wasting hours on dead ends. Vibe coding skips the planning step entirely. That’s fine for prototyping, but thinking before implementing is always going to produce better results, regardless of how fast your tools get.

The case for planning

Experienced developers have been making this point for decades. The advice shows up in programming proverbs, in classic software engineering books, and in the war stories of engineers who learned the hard way. The specific tools change, but the principle stays the same: time spent thinking through a problem is almost always less than time spent debugging a solution you didn’t fully understand.

Weeks of coding can save you hours of planning.

— Programming proverb

Steve Wozniak took this further than most. For much of his early career, he had to plan before building simply because he couldn’t afford the parts. As a teenager, he spent years designing computers entirely on paper, redesigning the same machine dozens of times before ever touching a soldering iron. He turned constraint into craft. Wozniak once said: “It’ll be worth every minute you spend alone at night, thinking and thinking about what it is you want to design or build. It’ll be worth it, I promise.”

The sooner you start to code, the longer the program will take.

— Roy Carlson, often cited as Carlson’s Law

If you learned to code when iterations were slow and expensive, the value of a plan.md probably feels obvious. If you started in an era of fast feedback loops and hot reloading, you might not have the same instinct. But the principle holds either way: giving your AI agent a complete picture of your constraints, goals, and architectural decisions up front leads to better outcomes than discovering problems one at a time during implementation.

Example Workflow

I recently needed to upgrade a React Native app from Expo SDK 45 to SDK 53. If you’ve worked with React Native, you know this kind of upgrade can take weeks or even months. Eight major SDK versions, React 17 to 19, React Native 0.68 to 0.79, a complete audio API replacement, dozens of breaking changes, deprecated APIs, and subtle compatibility issues. Most of the time isn’t spent writing code; it’s spent researching what changed, figuring out what breaks, and debugging issues you didn’t anticipate. This is where the plan.md approach really proves itself. Instead of diving in and discovering problems as I went, I started with a plan.md file and had the agent do the research first.

I need to upgrade my Expo app from SDK 45 to SDK 53. Create a plan.md that:

1. Lists all packages that need updating with current and target versions

2. Researches breaking changes for each major version jump

3. Identifies deprecated APIs I'm using that need migration

4. Provides specific code changes with before/after examples

5. Includes validation steps and rollback instructions

Search online for Expo SDK changelogs, React 19 migration guides, and any package-specific breaking changes. Check my codebase for usage of deprecated patterns.

The agent researched breaking changes across all the version jumps, checked my codebase for deprecated patterns, and produced a comprehensive upgrade plan. The plan below covers the full upgrade path: a version matrix for every package, all breaking changes across eight SDK versions, step-by-step migration commands, code changes for the expo-av to expo-audio migration, and troubleshooting for common failures. It’s long because real upgrade plans are long. The point is that all of this research happened before I touched any code.

# Ongaku Mobile DIRECT Upgrade Plan - SDK 45 → 53 (No Incremental Steps)

## ⚡ STRATEGY: Direct Jump to Latest Versions WITH New Architecture

**We're going straight from SDK 45 to SDK 53 WITH the New Architecture enabled** - no incremental upgrades, maximum performance. This saves hours of work and gets us the latest stable versions with best performance immediately.

### Why New Architecture?

- **Better Performance**: Direct communication via JSI instead of bridge

- **Faster Startup**: TurboModules load on-demand

- **Type Safety**: Better TypeScript integration

- **Future-Proof**: Legacy architecture is frozen, no new features

- **SDK 53 Default**: It's the default anyway, we're just embracing it

## 📊 Current → Target Versions

| Package | Current | Target | Breaking Changes |
|---------|---------|--------|------------------|
| **expo** | 45.0.0 | **53.0.0** | Major: New Architecture ENABLED, expo-av deprecated |
| **react** | 17.0.2 | **19.0.0** | Automatic batching, stricter types |
| **react-native** | 0.68.2 | **0.79.0** | New Architecture, Metro changes |
| **@react-native-firebase** | 15.4.0 | **23.3.1** | Minor API updates only |
| **@react-navigation** | 6.x | **7.x** | Navigation API changes |
| **@reduxjs/toolkit** | 1.8.5 | **2.x** | Builder syntax required |
| **styled-components** | 5.3.5 | **6.x** | Transient props, no auto-prefixing |
| **expo-av** | 11.2.3 | **DELETE** → **expo-audio 2.0** | Complete API change |
| **TypeScript** | 4.3.5 | **5.x** | Stricter types |

## 🚨 ALL Breaking Changes (45→53)

### Expo SDK Breaking Changes

1. **expo-av deprecated** - Migrate to expo-audio

2. **New Architecture enabled by default** in SDK 53 (we're keeping it ENABLED)

3. **Push notifications removed from Expo Go** (need dev build)

4. **Metro uses package.json exports** - May break deep imports

5. **Hermes required in Expo Go** (JSC no longer supported)

6. **expo-barcode-scanner removed** - Use expo-camera instead

7. **Constants.manifest deprecated** - Use Constants.expoConfig

8. **sentry-expo deprecated** - Use @sentry/react-native

9. **ProgressBarAndroid/ProgressViewIOS removed** from React Native

10. **Minimum iOS 15.1, Android SDK 24**

### New Architecture Specific Changes

1. **Fabric Renderer**: New UI rendering system

2. **TurboModules**: On-demand native module loading

3. **JSI (JavaScript Interface)**: Direct JS-to-native communication

4. **Bridgeless Mode**: No more async bridge

5. **Hermes Required**: JSC no longer supported in Expo Go

### React 17→19 Breaking Changes

1. **No ReactDOM.render** - Must use createRoot

2. **Automatic batching** - All updates batched

3. **StrictMode runs effects twice** in development

4. **defaultProps deprecated** - Use default parameters

5. **PropTypes removed** - Use TypeScript

### Package-Specific Breaking Changes

- **React Navigation 7**: navigationInChildEnabled required for nested navigation

- **styled-components 6**: No auto vendor prefixing, use transient props ($prefix)

- **Redux Toolkit 2**: Builder callback required for extraReducers

- **Firebase**: Already native ✅ (minimal changes)

## Step 1: Pre-Flight Checklist (5 minutes)

```bash

cd /Users/rmendiola/code/me/ongaku/ongaku-mobile

# CRITICAL: Verify Node version (must be 18+)

node --version # You have v23.3.0 ✅

# Check for uncommitted changes - MUST be clean

git status

# Create upgrade branch

git checkout -b direct-sdk-53-upgrade

git add -A && git commit -m "Pre SDK 53 upgrade snapshot"

# Save current state for reference

npm list --depth=0 > deps-before.txt

npx jest --listTests > tests-before.txt

# Check for patches that might break

ls -la patches/ 2>/dev/null || echo "No patches (good)"

grep -E "overrides|resolutions" package.json || echo "No overrides (good)"

```

## Step 2: Nuclear Clean (2 minutes)

```bash

# Stop everything

watchman watch-del-all 2>/dev/null || true

kill $(lsof -t -i:8081) 2>/dev/null || true

kill $(lsof -t -i:19000) 2>/dev/null || true

# Delete EVERYTHING

rm -rf node_modules

rm -rf ios/Pods ios/build

rm -rf android/build android/app/build android/.gradle

rm -rf $HOME/.gradle/caches/

rm -f package-lock.json yarn.lock

rm -rf .expo

rm -rf $TMPDIR/metro-* $TMPDIR/haste-*

# Clear all caches

npm cache clean --force

cd ios && pod cache clean --all 2>/dev/null || true && cd ..

```

## Step 3: Direct SDK 53 Upgrade with New Architecture (10 minutes)

```bash

# Expo CLI ships inside the expo package, so no global install is needed

# DIRECT upgrade to SDK 53 (New Architecture is default)

npm install expo@^53.0.0 --force

# Align Expo-managed packages with SDK 53

npx expo install --fix

# This will update:

# - expo to ~53.0.0

# - react to ^19.0.0

# - react-native to 0.79.x

# - All expo packages to SDK 53 compatible versions

# Install with force (React 19 has peer conflicts)

npm install --force

# Install missing/updated packages

npx expo install expo-audio@~2.0.0

npm uninstall expo-av # Remove deprecated package

# Update other major packages to latest

npm install @react-navigation/native@^7.0.0 \

@react-navigation/bottom-tabs@^7.0.0 \

@react-navigation/native-stack@^7.0.0 \

@reduxjs/toolkit@^2.0.0 \

styled-components@^6.0.0 \

@react-native-firebase/app@latest \

@react-native-firebase/analytics@latest \

--force

# Update dev dependencies

npm install --save-dev @types/react@^19.0.0 \

@types/react-native@^0.79.0 \

typescript@^5.0.0 \

--force

```

## Step 4: Fix Breaking Code Changes & New Architecture Compatibility (20 minutes)

### 4.0: Verify New Architecture Compatibility

```bash

# Check if any code uses direct bridge access (unlikely in this app)

grep -r "NativeModules" --include="*.ts" --include="*.tsx" --include="*.js" . || echo "No direct NativeModules usage (good)"

grep -r "requireNativeComponent" --include="*.ts" --include="*.tsx" --include="*.js" . || echo "No custom native components (good)"

```

### 4.1: Audio Migration (CRITICAL)

```typescript
// File: /Users/rmendiola/code/me/ongaku/ongaku-mobile/logic/playback.ts
// REPLACE ENTIRE FILE with new expo-audio implementation:

import { Audio, AudioPlayer } from 'expo-audio';
import { pitchesToPitchFiles } from './pitchesToPitchFiles';
import { store } from '../redux/store';
import {
  addPlayingPitch,
  removePlayingPitch,
  setPlayingChord,
  clearPlayback,
} from '../redux/playbackSlice';
import { PitchFile } from '../constants/piano';

const audioPlayers: Record<string, AudioPlayer> = {};

export const playPitch = async (pitchFile: PitchFile) => {
  const { playbackSource, pitch } = pitchFile;

  try {
    let player = audioPlayers[pitch];

    if (!player) {
      // Create new player
      player = new AudioPlayer({
        source: playbackSource,
        shouldCorrectPitch: false,
        volume: 1.0,
      });

      // Listen for completion
      player.addListener('playbackStatusUpdate', (status) => {
        if (status.isLoaded && status.didJustFinish) {
          store.dispatch(removePlayingPitch({ pitch }));
          // Reset for replay
          player.seekTo(0).catch(() => {});
        }
      });

      audioPlayers[pitch] = player;
    } else {
      // Reset if replaying
      await player.seekTo(0);
    }

    store.dispatch(addPlayingPitch({ pitch }));
    await player.playAsync();
  } catch (error) {
    console.error('Error playing pitch:', pitch, error);
    store.dispatch(removePlayingPitch({ pitch }));
  }
};

export const playChord = async (
  chord: string,
  pitches: string[],
  arpeggio: boolean = false
) => {
  store.dispatch(clearPlayback());
  store.dispatch(setPlayingChord({ chord, pitches, arpeggio }));

  const pitchFiles = pitchesToPitchFiles(pitches);

  if (arpeggio) {
    for (let i = 0; i < pitchFiles.length; i++) {
      await playPitch(pitchFiles[i]);
      if (i < pitchFiles.length - 1) {
        await new Promise(resolve => setTimeout(resolve, 100));
      }
    }
  } else {
    await Promise.all(pitchFiles.map(playPitch));
  }
};

export const stopAllSounds = async () => {
  await Promise.all(
    Object.values(audioPlayers).map(p => p.pauseAsync().catch(() => {}))
  );
  store.dispatch(clearPlayback());
};

export const cleanupAudio = async () => {
  await Promise.all(
    Object.values(audioPlayers).map(p => p.release().catch(() => {}))
  );
  Object.keys(audioPlayers).forEach(key => delete audioPlayers[key]);
};
```

### 4.2: Fix Music Theory Test

```typescript
// File: /Users/rmendiola/code/me/ongaku/ongaku-mobile/logic/stepsToIntervals.tsx
// FIX: Return n-1 intervals for n notes

export const stepsToIntervals = (steps: number[]): string[] => {
  const intervals: string[] = [];

  // Bug fix: Only iterate to length-1
  for (let i = 0; i < steps.length - 1; i++) {
    const interval = steps[i + 1] - steps[i];
    intervals.push(INTERVALS[interval] || `+${interval}`);
  }

  return intervals; // Now returns 3 intervals for 4-note chord
};
```

### 4.3: React Navigation 7 Updates

```typescript

// If you have any navigation.navigate to nested screens:

// OLD: navigation.navigate('ScreenInNestedNavigator')

// NEW: Add to NavigationContainer if needed:

<NavigationContainer navigationInChildEnabled>

{/* your navigators */}

</NavigationContainer>

```

### 4.4: styled-components 6 Updates

```typescript

// Check for any $as or $forwardedAs props:

// OLD: <StyledComponent $as="button" />

// NEW: <StyledComponent as="button" />

// For vendor prefixing (if needed for old browsers):

import { StyleSheetManager } from 'styled-components';

// Wrap your app:

<StyleSheetManager enableVendorPrefixes>

<App />

</StyleSheetManager>

```

### 4.5: Redux Toolkit 2 Updates

```typescript
// Check any createSlice files for object syntax in extraReducers
// OLD: extraReducers: { [action.type]: reducer }
// NEW: Must use builder callback:

extraReducers: (builder) => {
  builder.addCase(action, reducer);
}
```

### 4.6: Remove Deprecated Patterns

```bash

# Find and fix deprecated patterns

grep -r "defaultProps" components/ screens/ logic/ || echo "No defaultProps (good)"

grep -r "PropTypes" components/ screens/ logic/ || echo "No PropTypes (good)"

grep -r "Constants.manifest" . || echo "No manifest usage (good)"

grep -r "sentry-expo" . || echo "No sentry-expo (good)"

```

## Step 5: Update app.json Configuration (2 minutes)

```json
// File: /Users/rmendiola/code/me/ongaku/ongaku-mobile/app.json
{
  "expo": {
    // ... existing config ...

    // Enable New Architecture (it's default in SDK 53 anyway):
    "experiments": {
      "newArchEnabled": true // ENABLED for best performance
    },

    // Remove if present:
    // "hooks": { ... } // Removed in SDK 51

    // Update iOS config (merge with existing ios section):
    "ios": {
      // ... existing config ...
      "deploymentTarget": "15.1", // Minimum for SDK 53

      // For audio background playback (if needed):
      "infoPlist": {
        "UIBackgroundModes": ["audio"]
      }
    }
  }
}
```

## Step 6: Rebuild Native Projects (5 minutes)

```bash

# Clean prebuild with new configuration

npx expo prebuild --clean --npm

# iOS specific fixes

cd ios

# Remove Flipper if present

sed -i '' '/:flipper_configuration/d' Podfile 2>/dev/null || true

# Update pods

pod install --repo-update

cd ..

# Android specific fixes

cd android

# Clean gradle

./gradlew clean

./gradlew --stop

# Enable New Architecture

grep "newArchEnabled" gradle.properties || echo "newArchEnabled=true" >> gradle.properties

# If it exists but is false, update it:

sed -i '' 's/newArchEnabled=false/newArchEnabled=true/g' gradle.properties 2>/dev/null || true

cd ..

```

## Step 7: Verify New Architecture & Everything Works (10 minutes)

```bash

# 1. Run doctor to check for issues (including New Architecture compatibility)

npx expo-doctor

# 2. Verify New Architecture is enabled

grep "newArchEnabled" android/gradle.properties # Should show: newArchEnabled=true

grep "newArchEnabled" app.json # Should show: "newArchEnabled": true

# 3. Check critical dependencies installed correctly

npm list expo react react-native @react-native-firebase/app expo-audio

# 4. Start Metro to test basic functionality

npx expo start --clear

# Press 'i' for iOS or 'a' for Android to test

# 5. Run tests

npm test -- --watchAll=false 2>&1 | tee test-results.txt

# 6. Check for deprecation warnings

npx expo start 2>&1 | grep -i "deprecated" || echo "No deprecations!"

```

## Step 8: Manual Testing Checklist

- [ ] App launches without crash (with New Architecture)

- [ ] Verify in logs: "Fabric" and "TurboModule" messages appear

- [ ] Play single note (tests expo-audio)

- [ ] Play chord (tests multiple audio)

- [ ] Play arpeggio (tests sequential audio)

- [ ] Change root note (tests state management)

- [ ] Navigate between screens (tests React Navigation 7)

- [ ] Check Firebase events in console

- [ ] Verify styled-components rendering correctly

## 🚫 Common Issues & Immediate Fixes

### New Architecture Specific Issues

#### Issue: "Library X doesn't support New Architecture"

```bash

# Most libraries work with interop layer in SDK 53

# If a library truly doesn't work, it likely needs updating:

npm list [problematic-package]

# Check for updates:

npm outdated [problematic-package]

# Update to latest:

npm install [problematic-package]@latest --force

```

#### Issue: Performance seems worse

```bash

# This is usually due to development mode

# Test in production mode:

npx expo start --no-dev --minify

# Or build a production version:

eas build --platform ios --profile preview

```

### Issue: "Cannot find module 'expo-av'"

```bash

# You forgot to update imports

grep -r "expo-av" --include="*.ts" --include="*.tsx" --include="*.js"

# Replace all with 'expo-audio'

```

### Issue: Metro "Unable to resolve module"

```bash

rm -rf $TMPDIR/metro-* $TMPDIR/haste-*

npx expo start --clear

```

### Issue: "Peer dependency conflicts"

```bash

npm install --force

# or if that fails:

npm install --legacy-peer-deps

```

### Issue: iOS build fails with pod errors

```bash

cd ios

pod deintegrate

rm -rf ~/Library/Caches/CocoaPods

pod install --repo-update

cd ..

```

### Issue: Android build fails

```bash

cd android

rm -rf ~/.gradle/caches/

./gradlew clean

./gradlew --stop

cd ..

npx expo prebuild --clean

```

### Issue: TypeScript errors

```bash

# Update tsconfig.json

npx tsc --init # Generate fresh config

# Or just fix errors shown by:

npx tsc --noEmit

```

### Issue: New Architecture specific errors

```bash

# "TurboModules are enabled, but mTurboModuleRegistry hasn't been set"

# Fix: Clean and rebuild

rm -rf ios/build/generated

cd ios && pod install && cd ..

npx expo prebuild --clean

# "Fabric renderer not found"

# Fix: Ensure Hermes is enabled (it should be by default)

grep "hermesEnabled" android/gradle.properties || echo "hermesEnabled=true" >> android/gradle.properties

```

## ✅ Success Validation

```bash

# All of these should pass:

npx expo-doctor # ✓ No issues

npm test -- --watchAll=false # ✓ Tests pass (except known issues)

npx expo start --no-dev --minify # ✓ Production build works

```

## 🔄 Emergency Rollback

```bash

# If everything breaks:

git checkout main

git branch -D direct-sdk-53-upgrade

rm -rf node_modules ios/Pods android/build

npm install

npx expo prebuild --clean

# You're back to SDK 45

```

## Time Estimate

- **Total: 45-60 minutes** for complete upgrade

- Step 1-2: 7 minutes (cleanup)

- Step 3: 10 minutes (installation)

- Step 4: 20 minutes (code fixes)

- Step 5-6: 7 minutes (configuration)

- Step 7-8: 10 minutes (verification)

## Notes

- **NO incremental upgrades** - We go directly to SDK 53

- **New Architecture ENABLED** - Maximum performance, it's the future

- **expo-audio is simpler** than expo-av - fewer features but more stable

- **Keep native folders** - You have Firebase configuration there

- **React 19 is stricter** - May need to fix more TypeScript errors

- **75% of SDK 52+ apps** already use New Architecture successfully

The plan captured every breaking change I would have discovered the hard way. The expo-av to expo-audio migration alone would have taken hours to figure out mid-upgrade. Instead, I had the exact API changes documented before I started. The agent researched the changelogs, found the migration patterns, and verified which deprecated APIs I was actually using in my codebase.

This is what I mean by pre-doing the work. The research and planning that would normally happen in frustrating debugging sessions happens upfront, in the plan. When I finally ran the upgrade commands, I already knew what would break and how to fix it.
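The first item in the prompt, a list of packages with current and target versions, is the kind of thing that is easy to sanity-check yourself. Here is a minimal sketch, assuming plain semver-like version strings; `majorOf` and `buildVersionMatrix` are hypothetical helpers, not part of any Expo tooling. It only compares major versions, so packages on 0.x releases such as react-native would need a minor-version comparison instead.

```typescript
// Hypothetical helpers for illustration; not part of Expo or npm tooling.
type VersionRow = {
  name: string;
  current: string;
  target: string;
  majorJump: number;
};

// Extracts the leading major number from strings like "45.0.0", "^19.0.0", or "5.x".
function majorOf(version: string): number {
  const match = version.match(/\d+/);
  if (!match) {
    throw new Error(`No major version found in "${version}"`);
  }
  return Number(match[0]);
}

// Builds one row per package present in both maps, largest major jump first.
function buildVersionMatrix(
  current: Record<string, string>,
  target: Record<string, string>,
): VersionRow[] {
  return Object.keys(target)
    .filter((name) => name in current)
    .map((name) => ({
      name,
      current: current[name],
      target: target[name],
      majorJump: majorOf(target[name]) - majorOf(current[name]),
    }))
    .sort((a, b) => b.majorJump - a.majorJump);
}

// A few versions copied from the plan's version table.
const currentDeps = { expo: "45.0.0", react: "17.0.2", typescript: "4.3.5" };
const targetDeps = { expo: "53.0.0", react: "19.0.0", typescript: "5.x" };

const matrix = buildVersionMatrix(currentDeps, targetDeps);
for (const row of matrix) {
  console.log(`${row.name}: ${row.current} -> ${row.target} (+${row.majorJump} major)`);
}
```

Sorting by the size of the jump puts the riskiest packages at the top, which is usually where you want the agent to spend its research time.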

Refining Your Plan

Collaborating with an agent on a plan means you can refine it iteratively. Ask the agent to verify the plan against your codebase, then ask for another pass. Each pass adds more detail, catches more edge cases, and clarifies ambiguity.

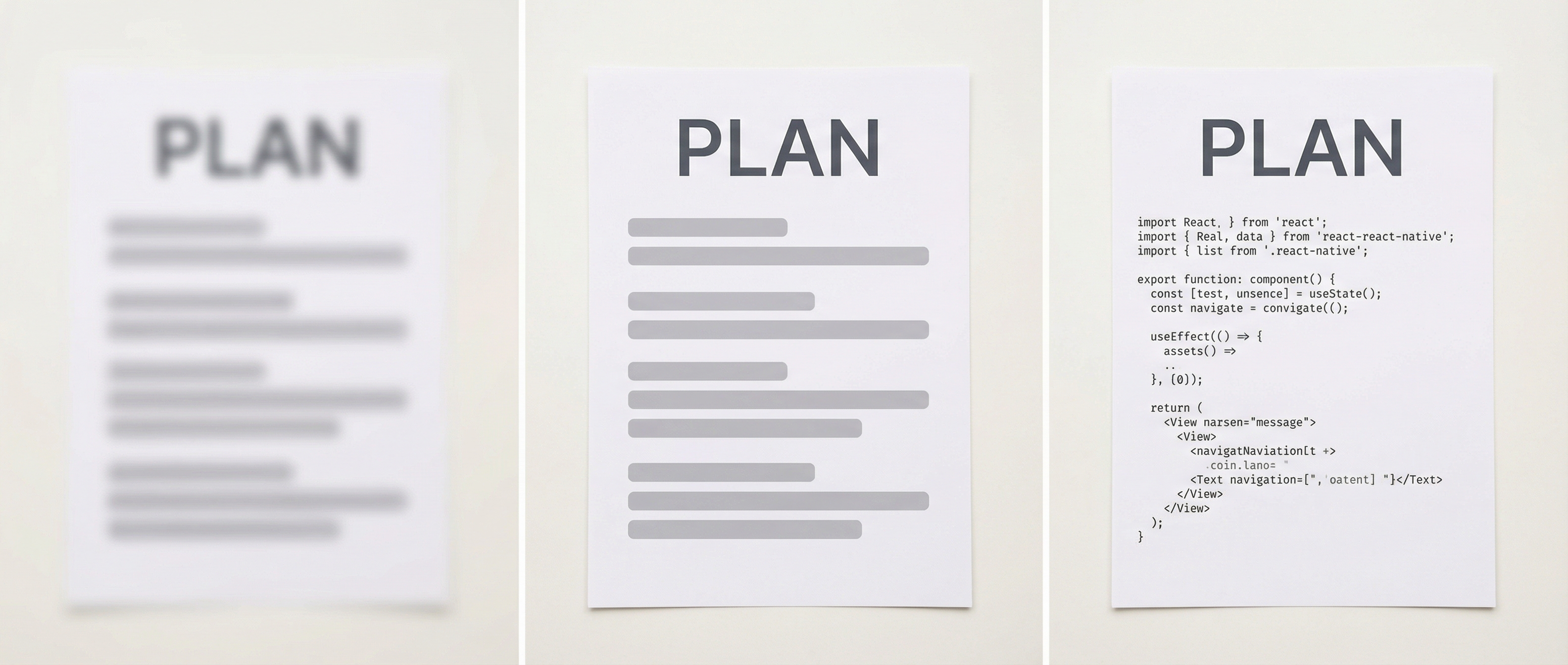

The plan gets clearer and more accurate with each iteration, like a diffusion model creating an image. If you’re not familiar with diffusion models, they’re the AI systems behind image generators like Midjourney and DALL-E. They start with pure noise and gradually refine it through multiple passes until you have a clear picture. Your plan works the same way: start rough, refine iteratively, verify against reality. Each pass checks against your actual codebase, so the refinement stays grounded in what exists rather than what the agent imagines might exist.


You don’t have to do every refinement pass manually. I use a /plan-more command (it lives in .claude/commands/) that systematically explores my codebase and grounds each task in real file paths, line numbers, and code patterns. It’s not that the command is more effective than just asking for refinement in chat. It’s that I run it so often that having it as a slash command saves time. You can run /plan-more over and over until the plan feels detailed enough to execute.

Keep iterating on plan.md. Check your assumptions against the codebase. Make sure that each step is clear and actionable, with code snippets showing the exact changes.

Here’s what that looks like using a task from the Expo upgrade. You start with a plan.md that has a vague task:

## styled-components Migration

Upgrade styled-components from v5 to v6 and fix any breaking changes.

- Update package version

- Fix deprecated patterns

- Test components still render correctly

Running /plan-more tells the agent to explore the codebase and refine vague tasks into concrete steps. The agent scans for actual styled-components usage and produces something any developer can pick up and execute:

## styled-components Migration (v5 → v6)

Files: components/PianoKey.tsx, components/ChordDisplay.tsx, screens/HomeScreen.tsx

### 1. Update transient props

3 components pass custom props to styled() wrappers without the $ prefix. In v6, these leak to the DOM and cause React warnings.

```tsx

// components/PianoKey.tsx (lines 45-62)

// Before:

const Key = styled.TouchableOpacity<{ isActive: boolean }>`

background: ${props => props.isActive ? '#fff' : '#000'};

`;

<Key isActive={playing} />

// After:

const Key = styled.TouchableOpacity<{ $isActive: boolean }>`

background: ${props => props.$isActive ? '#fff' : '#000'};

`;

<Key $isActive={playing} />

```

Same pattern for `isPlaying` in ChordDisplay.tsx (line 28) and `highlightColor` in HomeScreen.tsx (line 91).

### 2. Update ThemeProvider import

v6 moves ThemeProvider to a separate entry point for React Native.

```tsx

// App.tsx (line 4)

// Before:

import { ThemeProvider } from 'styled-components';

// After:

import { ThemeProvider } from 'styled-components/native';

```

### Validation

```bash

npx tsc --noEmit && npm test -- -t "styled"

```

The command found three specific components with transient prop issues and an import path that moved. Those are the kinds of things you’d discover one at a time during debugging. With /plan-more, they surface before you touch any code.
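A refined plan is only as good as the file paths it cites, and that part can be checked mechanically. The sketch below is one way to do it, assuming the plan mentions files as plain relative paths; `extractPaths`, `findMissingPaths`, and the sample data are all hypothetical. The `exists` check is passed in so the example runs without touching the file system; in a real script it would likely be `fs.existsSync`.

```typescript
// Matches relative paths that end in a common source extension,
// for example components/PianoKey.tsx or App.tsx.
const PATH_PATTERN = /\b[\w.-]+(?:\/[\w.-]+)*\.(?:tsx?|jsx?|json|md)\b/g;

// Returns each distinct path mentioned in the plan, in order of first appearance.
function extractPaths(planText: string): string[] {
  const matches: string[] = planText.match(PATH_PATTERN) ?? [];
  return matches.filter((path, index) => matches.indexOf(path) === index);
}

// Returns the paths the plan mentions that the `exists` check rejects.
function findMissingPaths(
  planText: string,
  exists: (path: string) => boolean,
): string[] {
  return extractPaths(planText).filter((path) => !exists(path));
}

// Hypothetical plan excerpt and file listing, for illustration only.
const planExcerpt = `
Files: components/PianoKey.tsx, components/ChordDisplay.tsx, screens/HomeScreen.tsx
Same pattern in components/PianoKey.tsx (lines 45-62) and components/Legacy.tsx.
`;
const filesOnDisk = [
  "components/PianoKey.tsx",
  "components/ChordDisplay.tsx",
  "screens/HomeScreen.tsx",
];

const missing = findMissingPaths(planExcerpt, (path) => filesOnDisk.indexOf(path) !== -1);
console.log(missing); // paths the plan cites that are not in filesOnDisk
```

Any path that shows up in the result is a spot where the agent may have imagined a file, which is a good prompt for another refinement pass.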

Parallel execution

Once your plan is detailed enough, you can structure it for parallel execution by multiple agents. The idea is to craft your plan.md with a topology that identifies independent workstreams, tasks that don’t depend on each other and can run simultaneously.

Tools like Claude Code and Cursor let you launch sub-agents for different parts of your plan. One agent handles the package upgrades while another migrates the audio code. Each agent reads the same plan.md, inherits the same architectural decisions, and works within the same constraints you’ve documented.

Implement plan.md using multiple agents in parallel. Make sure the agents work on independent sections so they don't conflict with each other.

Structuring your plan for parallelism means thinking about dependencies up front. Which tasks can run independently? Which ones need to finish before others can start? Documenting this in your plan.md turns it into a project execution map, not just a list of things to do.
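To make the dependency question concrete, here is a minimal sketch that groups tasks into waves, where everything in a wave can run at the same time. It assumes each task lists the ids it depends on; the `Task` shape, `planWaves`, and the task names are hypothetical and not a feature of Claude Code or Cursor.

```typescript
type Task = {
  id: string;
  dependsOn: string[];
};

// Groups tasks into waves: every task in a wave depends only on tasks from
// earlier waves, so the tasks within one wave can run in parallel.
function planWaves(tasks: Task[]): string[][] {
  const done: string[] = [];
  let remaining = tasks.slice();
  const waves: string[][] = [];

  while (remaining.length > 0) {
    const ready = remaining.filter((task) =>
      task.dependsOn.every((dep) => done.indexOf(dep) !== -1),
    );
    if (ready.length === 0) {
      // Nothing can start: a dependency cycle or a reference to an unknown task.
      throw new Error(
        "Unresolvable dependencies: " + remaining.map((task) => task.id).join(", "),
      );
    }
    const readyIds = ready.map((task) => task.id);
    waves.push(readyIds);
    for (const id of readyIds) {
      done.push(id);
    }
    remaining = remaining.filter((task) => readyIds.indexOf(task.id) === -1);
  }
  return waves;
}

// Hypothetical task breakdown, loosely based on the upgrade plan's steps.
const upgradeTasks: Task[] = [
  { id: "upgrade-packages", dependsOn: [] },
  { id: "migrate-audio", dependsOn: [] },
  { id: "migrate-styled-components", dependsOn: ["upgrade-packages"] },
  { id: "rebuild-native", dependsOn: ["upgrade-packages"] },
  {
    id: "run-tests",
    dependsOn: ["migrate-audio", "migrate-styled-components", "rebuild-native"],
  },
];

const waves = planWaves(upgradeTasks);
waves.forEach((wave, index) => {
  console.log(`Wave ${index + 1}: ${wave.join(", ")}`);
});
```

You would not normally run code like this; the agent can reason about the ordering directly from plan.md. The point is that if you can't write the `dependsOn` lists for your tasks, the plan is not ready for parallel execution yet.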

The plan.md approach gets you about 80% of the way to a finished implementation. The remaining 20%, the small adjustments, edge cases you discover during testing, integration glue between parallel workstreams, is where vibe coding shines. Plan first, then iterate fast. The two approaches complement each other.

Building up your project’s context

Your plan.md files are temporary by design. They solve the problem in front of you and then become history. But the learnings embedded in those plans shouldn’t disappear.

After you finish a significant piece of work, ask your agent to turn the plan.md into permanent documentation. Create a docs/ folder with markdown files explaining your patterns, architectural decisions, and the reasoning behind them. A plan for migrating your authentication system becomes docs/auth-architecture.md. A plan for setting up your test infrastructure becomes docs/testing-patterns.md.

Turn plan.md into reference documentation in the docs/ folder. Focus on the patterns and decisions, not the step-by-step commands.

Reference these docs in your CLAUDE.md with instructions like “When working with audio playback, refer to docs/audio-patterns.md” or “Before upgrading Expo SDK, read docs/expo-upgrade-guide.md.” Claude Code loads CLAUDE.md at the start of every session, so your agent knows where to find the relevant doc and starts with the context you’ve built up over time.

This is the shift from prompt engineering to context engineering that I mentioned earlier. Instead of crafting the perfect prompt each time, you build up an environment of docs, constraints, and architectural decisions that your agent can draw from. The context compounds over time. Each plan you turn into documentation makes your next plan better.

A lot of the new features coming out with AI agents (Plan Mode, slash commands, custom skills) are packaging of this basic principle: have a file somewhere that your agent can refer to for building context. These features are conveniences, not new capabilities. You could have been doing this the entire time with plain markdown files.

When you invest in context, every interaction with your agent gets better, not just the next one.

Conclusion

The developers I’ve talked to who struggle with AI agents share a common pattern: they’re vibe coding. They give the agent minimal context, expect it to guess their intent, and get frustrated when it doesn’t. They go back and forth with the agent, each of them getting more lost, until someone gives up.

Remember what LLMs actually are: prediction machines. Given a string, they predict what string should come next. The more context you give up front, the tighter and more accurate the prediction. A plan.md file gives the agent enough context to make good decisions about what code to write and what to change. And here’s the thing: you don’t have to write all that context yourself. You can collaborate with the agent to build it. Ask the agent to investigate your codebase, research the problem, and draft the plan. Then refine it together. The agent does the legwork; you steer.

Start with your next non-trivial feature. Before you ask your agent to implement anything, ask it to help you create a plan. Describe what you’re trying to build, the constraints you’re working within, and the decisions you’ve already made. Let the agent research what it doesn’t know. Refine the plan until every step is concrete and actionable. Then execute.

You’ll spend more time planning than you’re used to. But you’ll spend far less time debugging, backtracking, and fixing the subtle bugs that come from an agent that didn’t have the full picture. The plan pays for itself every time.